General information on SOFA

General

Head-related transfer functions (HRTFs) describe the spatial filtering of the incoming sound due to the listener's anatomy. HRTFs are crucially important for the binaural reproduction of virtual acoustics. HRTFs have been measured by a number of laboratories and are typically stored in each lab's native file format. While each format suits the lab that created it, exchanging such data is difficult because the formats are incompatible with each other.

The spatially oriented format for acoustics (SOFA) aims at representing spatial data in a general way, allowing the storage not only of HRTFs but also of more complex data, e.g., directional room impulse responses (DRIRs) measured with a multichannel microphone array excited by a loudspeaker array. In order to simplify the adoption of SOFA for various applications, example implementations of the format specifications are provided together with a collection of exemplary data sets converted to SOFA.

Typical measurement setups

Among the first publicly available HRTFs were those of a dummy-head microphone measured in an anechoic room [1]. Two microphones placed at the ear simulators were used for the recordings and one loudspeaker was used for the signal excitation. The loudspeaker was moved to the desired elevation and the mannequin was rotated to the desired azimuth. In total, HRTFs for 710 spatial positions were measured at elevations from -40° to +90° in steps of 10°, over the 360° azimuthal range in steps of 5°, and at a constant distance of 1.4 m. The HRTFs are provided as impulse responses (IRs) with a length of 512 samples at a sampling rate of 44.1 kHz.

One of the first publicly available sets of HRTFs measured in human listeners was the CIPIC database [2]. The measurements were performed at a constant distance of 1 m for 1250 spatial directions around the listener. The HRTFs are available for 43 listeners as IRs of 200 samples at a sampling rate of 44.1 kHz. Since then, many other HRTF/DRIR databases have been made publicly available [3–8].

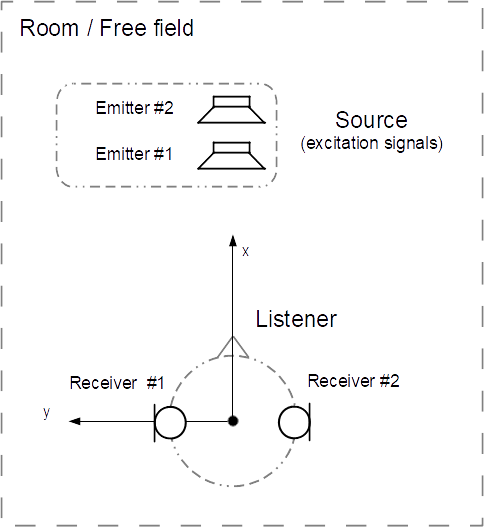

All those measurement setups have the following properties in common. In an anechoic chamber or in a room, excitation signals are generated and microphones are used to record the incoming signals (see Fig. 1, source). The measurement is repeated while varying the spatial position of the excitation source relative to the listener, which is done by varying the position of the listener, the sound source, or both in different dimensions. Binaural HRTF measurement setups use only two microphones to record the left and right ear signals. However, HRTF/DRIR measurements may also involve multiple microphones, e.g., three microphones per head side in hearing-assist devices [7], tens of microphones arranged in an array structure at different directions and distances from the center [9], a multichannel microphone array arranged around the listener in a reciprocal HRTF measurement system [10], [11], multichannel microphone arrays for measuring DRIRs [12], or various microphone positions in a room, e.g., for concert-hall acoustics measurements [13]. As a generalization, microphones and an object comprising those microphones can be identified. Thus, in this article, a microphone as the single receiver of the sound field is called the receiver, and the object comprising all the receivers is called the listener, see Fig. 1 (source).

The sound source used for the excitation signal is not necessarily a single point source. Loudspeaker arrays have been used either to control the sound field surrounding the listener, e.g., with wave-field synthesis [11], [14], [15] or higher-order Ambisonics [16], [17], or to control the radiation characteristics of the sound sources [18]. Similarly to the concept of listener and receivers, in this article, the individual sources creating the excitation signal are called emitters and the object comprising the emitters is called the source. Note that a measurement setup with a source with multiple emitters and a listener with multiple receivers has already been considered [19].
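The listener/receiver and source/emitter terminology can be illustrated with a minimal Python sketch. The class names, the Cartesian coordinates in metres, and the example positions are assumptions made for illustration only; they are not taken from the SOFA specifications.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

# Assumed for illustration: Cartesian (x, y, z) coordinates in metres.
Position = Tuple[float, float, float]


@dataclass
class Receiver:
    """A single microphone, positioned relative to its listener."""
    position: Position


@dataclass
class Emitter:
    """A single sound-producing element, positioned relative to its source."""
    position: Position


@dataclass
class Listener:
    """The object comprising all receivers (e.g., a head with ear microphones)."""
    position: Position
    receivers: List[Receiver] = field(default_factory=list)


@dataclass
class Source:
    """The object comprising all emitters (e.g., a loudspeaker array)."""
    position: Position
    emitters: List[Emitter] = field(default_factory=list)


# A binaural setup: one listener with two receivers, one source with one emitter.
# The ear offsets of 0.09 m are made-up illustrative values.
head = Listener(
    position=(0.0, 0.0, 0.0),
    receivers=[Receiver((0.0, 0.09, 0.0)), Receiver((0.0, -0.09, 0.0))],
)
loudspeaker = Source(position=(1.4, 0.0, 0.0), emitters=[Emitter((0.0, 0.0, 0.0))])
```

A microphone array or a loudspeaker array would fit the same structure simply by adding more entries to `receivers` or `emitters`.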

In typical HRTF measurements, only the direction of the incoming signal is varied. More recent setups have also considered different source-to-ear distances [4], [11], [20]. However, sometimes the variation of other parameters is of interest. For example, HRTFs were measured as a function of the head orientation relative to the torso [21], or the room IRs were measured as a function of the room temperature [22]. An HRTF file format should thus be able to accommodate such parameters as well.

Aim of SOFA

While designing SOFA, we set up the following requirements:

- Description of a measurement setup with arbitrary geometry, i.e., not limited to special cases like a regular grid, or a constant distance;

- Self-describing data with a consistent definition, i.e., all the required information about the measurement setup must be provided as metadata in the file;

- Flexibility to describe data of multiple conditions (listeners, distances, etc.) in a single file;

- Partial file and network support;

- Available as binary file with data compression for efficient storage and transfer;

- Predefined description conventions for the most common measurement setups.

The SOFA specifications aim at fulfilling all those requirements. In a nutshell, the measurement setup is described by various objects and their relations. The information is stored in a numeric container based on netCDF-4. We consider a measurement as a discrete sampled observation done at a specific time and under a specific condition. Each measurement consists of data (e.g., an impulse response, IR) and is described by its corresponding dimensions and metadata. All measurements are stored in a single data structure (e.g., a matrix of IRs). A consistent description of measurement setups is given by the SOFA conventions.
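The idea of keeping all measurements in one data structure, each described by its dimensions and metadata, can be sketched in plain Python as follows. This is only an illustration under stated assumptions: the class `MeasurementSet`, its field names, and the (azimuth, elevation, distance) layout are hypothetical and do not reproduce the variable names of the SOFA specifications or the netCDF-4 container.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

# Hypothetical layout: (azimuth_deg, elevation_deg, distance_m) per measurement.
SourcePosition = Tuple[float, float, float]


@dataclass
class MeasurementSet:
    """All measurements in one structure, indexed [measurement][receiver][sample]."""
    sampling_rate_hz: float
    source_positions: List[SourcePosition]      # metadata: one entry per measurement
    impulse_responses: List[List[List[float]]]  # data: a "matrix" of IRs

    def __post_init__(self) -> None:
        # Self-describing data: every measurement must carry its own metadata.
        if len(self.source_positions) != len(self.impulse_responses):
            raise ValueError("need exactly one source position per measurement")

    def dimensions(self) -> Dict[str, int]:
        """Return the size of each dimension of the IR matrix."""
        first = self.impulse_responses[0]
        return {
            "measurements": len(self.impulse_responses),
            "receivers": len(first),
            "samples": len(first[0]),
        }


# Two measurements, two receivers (left and right ear), four samples each.
# The sample values are made up for illustration.
hrtf_set = MeasurementSet(
    sampling_rate_hz=44100.0,
    source_positions=[(0.0, 0.0, 1.4), (5.0, 0.0, 1.4)],
    impulse_responses=[
        [[1.0, 0.5, 0.0, 0.0], [0.9, 0.4, 0.0, 0.0]],
        [[0.8, 0.6, 0.1, 0.0], [1.0, 0.3, 0.0, 0.0]],
    ],
)
dims = hrtf_set.dimensions()
```

Because the position is stored per measurement rather than implied by a grid, arbitrary geometries and additional conditions could be added as further per-measurement metadata lists.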

Other existing data formats

Besides SOFA, HRTFs have been stored using different formats, all of them having advantages and disadvantages. The CIPIC database [2] provides a file per listener in either a plain text or Matlab (Mathworks, Inc.) file format. The directions are hard coded, i.e., the index of an HRTF corresponds to a predefined direction used in the measurements. While the representation of HRTFs from other directions is not possible, anthropometric data have been stored within that format. The openDAFF package, while similarly storing HRTFs only on a regular angular grid, uses a key-value system for the description of the metadata, which seems very promising. Other databases, such as LISTEN [3] and ARI [6], consist of an HRTF matrix and additional matrices describing the direction of the corresponding HRTF, thus allowing the representation of HRTFs from any direction. In those formats, HRTFs from each listener are stored in a separate file. In the database storing the HRTFs as a function of distance [4], the data are stored in a separate file for each distance. Combined with the necessity to store a separate file for each listener, those three latter formats would result in many files. The MARL-NYU database [23] harmonized the format of CIPIC, LISTEN, MIT, and other databases, and stores all those data in a single file. This concept seems promising when combined with a network interface and partial file access in the future. Most of those HRTFs are stored in Matlab formats, i.e., they use a Matlab file convention to store predefined matrices. In contrast, SDIF [24], a general format for storing audio-related data, has been adapted to HRTFs, allowing the storage of HRTFs of a single listener in a mixed text-based and binary representation. The concert-hall data [25], stored as compressed “.wav” files, are another example of a mixed-binary format, which further requires a description (separate text files) in order to interpret the data. The HRTFs measured in rooms (e.g., [8]) are also Matlab files, and the relationship between the data and the geometry of the measurement setup is provided in separate publications.
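The difference between hard-coded directions and an explicit direction matrix can be made concrete with a small Python sketch. The grid, the stored directions, and the function names below are hypothetical and do not reproduce the actual layout of any of the databases mentioned.

```python
from typing import List, Optional, Tuple

Direction = Tuple[float, float]  # assumed layout: (azimuth_deg, elevation_deg)

# Scheme A: hard-coded directions. The file holds only HRTFs; the direction of
# each HRTF is implied by its index and must be known from outside the file.
GRID_AZIMUTHS_DEG = [0.0, 90.0, 180.0, 270.0]  # hypothetical predefined grid


def grid_index(azimuth_deg: float) -> Optional[int]:
    """Return the HRTF index for an azimuth, or None if it is not on the grid."""
    if azimuth_deg in GRID_AZIMUTHS_DEG:
        return GRID_AZIMUTHS_DEG.index(azimuth_deg)
    return None


# Scheme B: explicit directions. A direction matrix is stored next to the HRTF
# matrix, so any direction can be represented.
def explicit_index(directions: List[Direction], wanted: Direction) -> Optional[int]:
    """Return the index of the HRTF measured at `wanted`, or None if absent."""
    if wanted in directions:
        return directions.index(wanted)
    return None


stored_directions = [(0.0, 0.0), (37.5, -12.0), (181.3, 45.0)]

off_grid = grid_index(37.5)                                # not representable in scheme A
found = explicit_index(stored_directions, (37.5, -12.0))   # representable in scheme B
```

In scheme A the file is only interpretable together with external knowledge of the grid, whereas scheme B keeps the geometry alongside the data.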

References

[1] W. G. Gardner and K. D. Martin, “HRTF measurements of a KEMAR,” J Acoust Soc Am, vol. 97, pp. 3907–3908, 1995.

[2] V. R. Algazi, R. O. Duda, D. M. Thompson, and C. Avendano, “The CIPIC HRTF database,” in IEEE Workshop on Applications of Signal Processing to Audio and Acoustics, 2001, pp. 99–102.

[3] O. Warusfel, “LISTEN HRTF database,” 2003. http://recherche.ircam.fr/equipes/salles/listen/.

[4] H. Wierstorf, M. Geier, A. Raake, and S. Spors, “A free database of head-related impulse response measurements in the horizontal plane with multiple distances,” in 130th Convention of the Audio Engineering Society (AES), 2011, ebrief 6.

[5] T. Nishino, S. Kajita, K. Takeda, and F. Itakura, “Interpolation of head related transfer functions of azimuth and elevation,” J Acoust Soc Jpn, vol. 57, pp. 685–692, 2001.

[6] P. Majdak, M. J. Goupell, and B. Laback, “3-D localization of virtual sound sources: effects of visual environment, pointing method, and training,” Atten Percept Psychophys, vol. 72, pp. 454–469, 2010.

[7] H. Kayser, S. D. Ewert, J. Anemüller, T. Rohdenburg, V. Hohmann, and B. Kollmeier, “Database of multichannel in-ear and behind-the-ear head-related and binaural room impulse responses,” EURASIP J Advances Sig Proc, Article ID 298605, 10 pages, 2009.

[8] M. Jeub, M. Schäfer, and P. Vary, “A binaural room impulse response database for the evaluation of dereverberation algorithms,” in 16th International Conference on Digital Signal Proc, 2009, pp. 1–5.

[9] I. Balmages and B. Rafaely, “Open-sphere designs for spherical microphone arrays,” IEEE Trans Audio Speech Lang Proc, vol. 15, pp. 727–732, 2007.

[10] D. N. Zotkin, R. Duraiswami, E. Grassi, and N. A. Gumerov, “Fast head-related transfer function measurement via reciprocity,” J Acoust Soc Am, vol. 120, pp. 2202–2215, 2006.

[11] M. Pollow, K.-V. Nguyen, O. Warusfel, T. Carpentier, M. Müller-Trapet, M. Vorländer, and M. Noisternig, “Calculation of head-related transfer functions for arbitrary field points using spherical harmonics decomposition,” Acta Acust United Ac, vol. 98, pp. 72–82, 2012.

[12] D. Khaykin and B. Rafaely, “Acoustic analysis by spherical microphone array processing of room impulse responses,” J Acoust Soc Am, vol. 132, pp. 261–270, 2012.

[13] J. Pätynen, S. Tervo, and T. Lokki, “Analysis of concert hall acoustics via visualizations of time-frequency and spatiotemporal responses,” J Acoust Soc Am, vol. 133, pp. 842–857, 2013.

[14] A. J. Berkhout, “Holographic approach to acoustic sound control,” J Audio Eng Soc, vol. 36, pp. 977–995, 1988.

[15] J. Ahrens and S. Spors, “Wave field synthesis of a sound field described by spherical harmonics expansion coefficients,” J Acoust Soc Am, vol. 131, pp. 2190–2199, 2012.

[16] M. A. Gerzon, “Ambisonics. Part two: Studio techniques,” Studio Sound, vol. 17, pp. 24–26, 1975.

[17] F. Zotter, H. Pomberger, and M. Noisternig, “Energy-preserving ambisonic decoding,” Acta Acust United Ac, vol. 98, pp. 37–47, 2012.

[18] B. Rafaely, “Spherical loudspeaker array for local active control of sound,” J Acoust Soc Am, vol. 125, pp. 3006–3017, 2009.

[19] S. Clapp, A. Guthrie, J. Braasch, and N. Xiang, “The use of multi-channel microphone and loudspeaker arrays to evaluate room acoustics,” in Proceedings of the Acoustics, 2012, vol. 131, p. 3208.

[20] S. Hosoe, T. Nishino, K. Itou, and K. Takeda, “Development of micro-dodecahedral loudspeaker for measuring head-related transfer functions in the proximal region,” in Proceedings of the IEEE Conference on Audio, Speech and Signal Processing (ICASSP), 2006, pp. 329–332.

[21] M. Guldenschuh, A. Sontacchi, and F. Zotter, “HRTF modelling in due consideration variable torso reflections,” in Proceedings of the Acoustics’08, 2008, pp. 99–104.

[22] G. W. Elko, E. Diethorn, and T. Gänsler, “Room impulse response variation due to thermal fluctuation and its impact on acoustic echo cancellation,” in International Workshop on Acoustic Echo and Noise Control (IWAENC2003), 2003.

[23] A. Andreopoulou and A. Roginska, “Towards the creation of a standardized HRTF repository,” in 131st Convention of the Audio Engineering Society (AES), 2011, Convention Paper 8571.

[24] D. Schwarz and M. Wright, “Extensions and applications of the SDIF sound description interchange format,” in Proceedings of the International Computer Music Conference, 2000.

[25] J. Merimaa, T. Peltonen, and T. Lokki, “Concert hall impulse responses - Pori, Finland,” 2005. http://www.acoustics.hut.fi/projects/poririrs/.

[26] H. Ziegelwanger and P. Majdak, “Continuous-direction model of the time-of-arrival in the head-related transfer functions,” J Acoust Soc Am, submitted.

[27] M. Noisternig, F. Zotter, and B. F. Katz, “Reconstructing sound source directivity in virtual acoustic environments,” in Principles and Applications of Spatial Hearing, Y. Suzuki, D. S. Brungart, and H. Kato, Eds. Singapore: World Scientific Publishing, 2011, pp. 357–373.